Mel Frequency Cepstral Coefficients (MFCCs)

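```python
import IPython.display
import essentia.standard as ess
import matplotlib.pyplot as plt
import librosa
import librosa.display  # provides waveshow and specshow
import numpy
import sklearn.preprocessing  # makes sklearn.preprocessing.scale available

from mirdotcom import mirdotcom

mirdotcom.init()
```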

The mel frequency cepstral coefficients (MFCCs) of a signal are a small set of features (usually about 10 to 20) that concisely describe the overall shape of a spectral envelope. In MIR, they are often used to describe timbre.
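The computation behind that summary can be sketched in five steps: frame the signal, take a power spectrum, pool it into mel bands, take the log, and apply a DCT. The sketch below uses only NumPy and SciPy; the function names, the HTK-style mel formula, the 40-band filter bank, and the white-noise test signal are illustrative assumptions, not the exact algorithm used by librosa or essentia.

```python
import numpy as np
from scipy.fft import dct


def hz_to_mel(f):
    # HTK-style mel formula (an assumption; librosa defaults to the Slaney variant)
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(sr, n_fft, n_mels):
    """Triangular filters whose centres are equally spaced on the mel scale."""
    fft_freqs = np.linspace(0.0, sr / 2.0, n_fft // 2 + 1)
    hz_points = mel_to_hz(np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2.0), n_mels + 2))
    fb = np.zeros((n_mels, fft_freqs.size))
    for i in range(n_mels):
        left, centre, right = hz_points[i : i + 3]
        rising = (fft_freqs - left) / (centre - left)
        falling = (right - fft_freqs) / (right - centre)
        fb[i] = np.maximum(0.0, np.minimum(rising, falling))
    return fb


def mfcc_sketch(x, sr, n_fft=2048, hop=512, n_mels=40, n_mfcc=13):
    """Return an (n_mfcc, n_frames) array of MFCCs for a 1-D signal x."""
    # 1. Slice x into overlapping, Hann-windowed frames (no padding)
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    frames = np.stack([x[i * hop : i * hop + n_fft] * window for i in range(n_frames)])
    # 2. Power spectrum of each frame: (n_frames, n_fft // 2 + 1)
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    # 3. Pool the power into mel bands: (n_frames, n_mels)
    mel_energy = power @ mel_filterbank(sr, n_fft, n_mels).T
    # 4. Log compression; the small floor avoids log(0) in silent bands
    log_mel = np.log(mel_energy + 1e-10)
    # 5. DCT-II across the bands; keep only the first n_mfcc coefficients
    return dct(log_mel, type=2, norm="ortho", axis=1)[:, :n_mfcc].T


rng = np.random.default_rng(0)
sr = 22050
x = rng.standard_normal(sr)  # one second of white noise as a stand-in signal
print(mfcc_sketch(x, sr).shape)  # (13, 40)
```

`librosa.feature.mfcc` follows the same outline but, by default, uses a Slaney-style filter bank with 128 mel bands, centred (padded) frames, and decibel compression via `librosa.power_to_db`, so its values will differ from this sketch.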

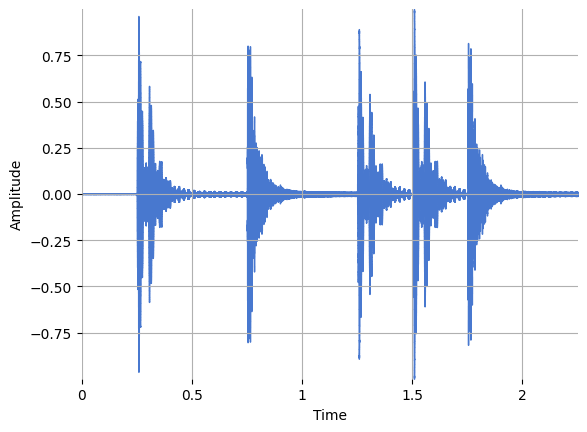

Plot the audio signal:

```python
filename = mirdotcom.get_audio("simple_loop.wav")
x, fs = librosa.load(filename)  # resampled to librosa's default 22,050 Hz
librosa.display.waveshow(x, sr=fs)
plt.ylabel("Amplitude")
```

Play the audio:

```python
IPython.display.Audio(x, rate=fs)
```

Computing MFCCs

librosa.feature.mfcc computes MFCCs across an audio signal:

```python
mfccs = librosa.feature.mfcc(y=x, sr=fs)
print(mfccs.shape)
```

(20, 97)

In this case, `mfcc` computed 20 MFCCs (librosa's default `n_mfcc`) over 97 frames.
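The number of frames follows from the signal length and the hop size. A minimal sketch, assuming librosa's defaults of `hop_length=512` and `center=True` (which pads `n_fft // 2` samples on each side, so the frame count does not depend on `n_fft`); the helper name is hypothetical:

```python
def librosa_frame_count(n_samples, hop_length=512):
    # With center=True the padded length is n_samples + n_fft, so
    # 1 + (n_samples + n_fft - n_fft) // hop_length frames fit.
    return 1 + n_samples // hop_length


print(librosa_frame_count(22050))  # one second at 22,050 Hz -> 44
```

Inverting it, 97 frames at the default hop correspond to between 49,152 and 49,663 samples, roughly 2.2 seconds at 22,050 Hz.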

The very first MFCC, the 0th coefficient, does not convey information about the overall shape of the spectrum. It reflects the average log energy across the mel bands, i.e. a constant offset added to the entire log spectrum. Therefore, many practitioners discard the first MFCC when performing classification. For now, we will use the MFCCs as they are.
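Why the 0th coefficient only tracks an offset follows from the DCT: with the orthonormal DCT-II, coefficient 0 is the sum of the N log band energies divided by √N, while every other basis function sums to zero across the bands. A minimal sketch with SciPy, using a random vector as a hypothetical stand-in for one frame's log-mel spectrum:

```python
import numpy as np
from scipy.fft import dct

rng = np.random.default_rng(0)
n_mels = 40
log_mel = rng.standard_normal(n_mels)  # stand-in log-mel spectrum of one frame

offset = 6.0  # a constant added to every band, e.g. the same frame played louder
delta = dct(log_mel + offset, norm="ortho") - dct(log_mel, norm="ortho")

# Only coefficient 0 moves (by offset * sqrt(n_mels)); the others change by ~0
print(delta[0], np.abs(delta[1:]).max())

# Discarding the 0th coefficient is a slice over the coefficient axis
mfccs_without_c0 = dct(log_mel, norm="ortho")[1:]
```

For the librosa output above, where coefficients run along the first axis, the equivalent slice would be `mfccs[1:]`.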

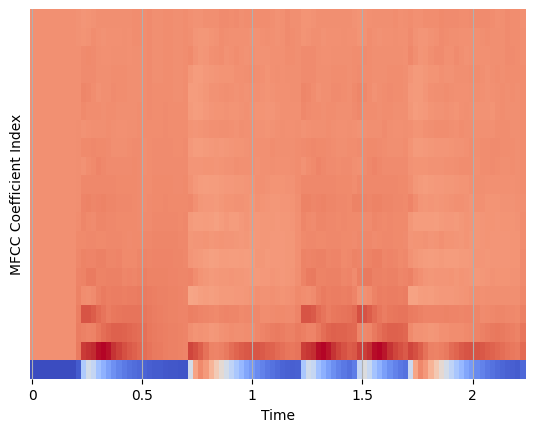

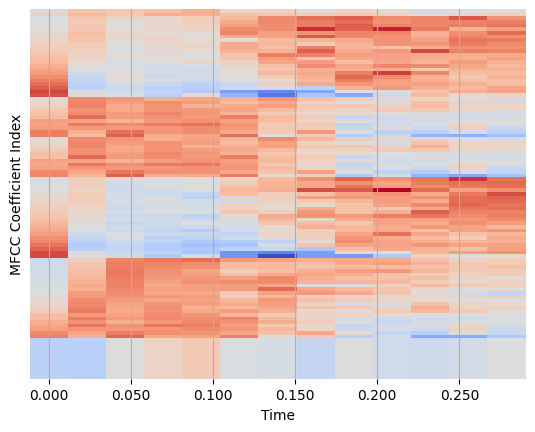

Display the MFCCs:

```python
librosa.display.specshow(mfccs, sr=fs, x_axis="time")
plt.ylabel("MFCC Coefficient Index")
```

Feature Scaling

Let’s scale the MFCCs such that each coefficient dimension has zero mean and unit variance:

```python
mfccs = sklearn.preprocessing.scale(mfccs, axis=1)
print(mfccs.mean(axis=1))
print(mfccs.var(axis=1))
```

[-6.7592896e-09 -5.8375682e-09 3.0724043e-09 -1.6898224e-09
-2.1506830e-09 7.6810105e-09 1.5669261e-08 1.1982377e-08
1.4747541e-08 -1.0830226e-08 -1.1675136e-08 5.2230873e-09
-6.7592896e-09 -8.6027319e-09 -4.9158468e-09 2.7651639e-09
4.3013659e-09 1.6898223e-08 -7.9882509e-09 1.2289617e-08]
[0.99999994 1. 1.0000001 0.99999994 0.9999998 1.
1.0000001 0.99999994 1. 1.0000001 1. 1.
1. 1. 1.0000001 0.99999994 1.0000001 0.99999994
1.0000001 1. ]

sklearn may also emit two `UserWarning`s at this step, reporting "Numerical issues were encountered when centering the data" and "...when scaling the data". They stem from float32 precision: librosa returns float32 features, and residual means on the order of 1e-8, like those printed above, are large enough to fail sklearn's check that the centred data has zero mean. The means are effectively zero and the variances effectively one; converting with `mfccs.astype(numpy.float64)` before scaling should avoid the warnings.
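For reference, per-coefficient standardization is a row-wise mean subtraction followed by division by the standard deviation. The sketch below should match `sklearn.preprocessing.scale(mfccs, axis=1)` up to rounding; the helper name and the random demo matrix are hypothetical, and it works in float64 throughout.

```python
import numpy as np


def standardize_rows(features):
    """Zero-mean, unit-variance scaling of each row (one row per coefficient)."""
    features = np.asarray(features, dtype=np.float64)  # float64 limits round-off
    mean = features.mean(axis=1, keepdims=True)
    std = features.std(axis=1, keepdims=True)  # population std (ddof=0)
    std[std == 0.0] = 1.0  # leave constant rows unscaled instead of dividing by 0
    return (features - mean) / std


rng = np.random.default_rng(0)
# Random stand-in for a (20, 97) float32 MFCC matrix
demo = rng.normal(loc=50.0, scale=10.0, size=(20, 97)).astype(np.float32)
scaled = standardize_rows(demo)
print(np.abs(scaled.mean(axis=1)).max(), scaled.var(axis=1).min())
```

Setting zero standard deviations to 1 mirrors how sklearn avoids division by zero for constant features.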

Display the scaled MFCCs:

```python
librosa.display.specshow(mfccs, sr=fs, x_axis="time")
plt.ylabel("MFCC Coefficient Index")
```

Alternative Method

We can also use `essentia.standard.MFCC` to compute MFCCs frame by frame, and display them as an “MFCC-gram”:

```python
frame_sz = 1024
hop_sz = 500

hamming_window = ess.Windowing(type="hamming")
spectrum = ess.Spectrum()  # magnitude spectrum with frame_sz // 2 + 1 bins
# Match the MFCC filter bank to the spectrum size and to the loaded sample rate;
# essentia's defaults assume inputSize=1025 and sampleRate=44100
mfcc = ess.MFCC(inputSize=frame_sz // 2 + 1, sampleRate=fs, numberCoefficients=13)

# MFCC returns (mel band energies, MFCCs); keep the MFCCs, one row per frame
mfccs = numpy.array(
    [
        mfcc(spectrum(hamming_window(frame)))[1]
        for frame in ess.FrameGenerator(x, frameSize=frame_sz, hopSize=hop_sz)
    ]
)
print(mfccs.shape)
```

(101, 13)

Scale the MFCCs:

```python
mfccs = sklearn.preprocessing.scale(mfccs)  # default axis=0: scale each coefficient column
```


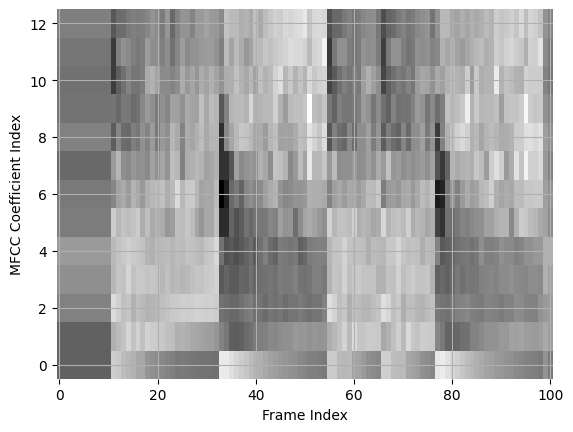

Plot the MFCCs:

```python
plt.imshow(mfccs.T, origin="lower", aspect="auto", interpolation="nearest")
plt.ylabel("MFCC Coefficient Index")
plt.xlabel("Frame Index")
```

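The MFCC-gram above is indexed by frame. To label the horizontal axis in seconds instead, each frame index can be converted to a time. A sketch, assuming frame i is centred on sample i * hop_sz (our reading of the default `startFromZero=False` behaviour of essentia's `FrameGenerator`); `frame_times` is a hypothetical helper:

```python
import numpy as np


def frame_times(n_frames, hop_size, sr):
    # Time (s) at the centre of each frame, assuming frame i is centred on
    # sample i * hop_size (essentia's FrameGenerator default, startFromZero=False)
    return np.arange(n_frames) * hop_size / sr


times = frame_times(101, hop_size=500, sr=22050)
print(times[-1])  # about 2.27 s
```

Passing `extent=[times[0], times[-1], 0, mfccs.shape[1]]` to `plt.imshow` and labelling the axis "Time (s)" would then place the MFCC-gram on a time axis.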