Neural Networks

import warnings

import matplotlib.pyplot as plt

import numpy

from mirdotcom import mirdotcom

warnings.filterwarnings("ignore")

mirdotcom.init()

Using TensorFlow backend.

Neural networks are a category of machine learning models that have seen a resurgence since 2006. Deep learning is a recent area of machine learning that stacks many layers of neurons (e.g. 20, 50, or more) to form a “deep” neural network. Such deep networks can accomplish sophisticated classification tasks that classical machine learning models find difficult.

Keras

Keras is a Python package for deep learning that provides an easy-to-use layer of abstraction on top of Theano and TensorFlow.

Import Keras objects:

import keras.optimizers

from keras.models import Sequential

from keras.layers import Dense

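Create a neural network architecture by stacking layers of neurons, defining the number of neurons in each layer and its activation function. Here, a 2-dimensional input feeds two hidden layers of 4 ReLU neurons each, followed by an output layer of 2 softmax neurons (one probability per class):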

model = Sequential()

model.add(Dense(4, activation="relu", input_dim=2))

model.add(Dense(4, activation="relu"))

model.add(Dense(2, activation="softmax"))
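To make the architecture concrete, here is a minimal NumPy sketch of the forward pass that the `Sequential` model above computes. Each `Dense` layer computes `activation(x @ W + b)`. The weights below are random placeholders (Keras initializes and then learns its own), and the helper names `relu`, `softmax`, and `forward` are hypothetical, chosen only for illustration.

```python
import numpy as np

def relu(z):
    # Elementwise max(0, z)
    return np.maximum(0.0, z)

def softmax(z):
    # Subtract the row max for numerical stability; each row then sums to 1
    e = np.exp(z - z.max(axis=1, keepdims=True))
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
# Layer sizes mirror the model: 2 inputs -> 4 -> 4 -> 2 outputs
sizes = [2, 4, 4, 2]
# Random placeholder weights and zero biases (not trained values)
weights = [rng.normal(size=(m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
biases = [np.zeros(n) for n in sizes[1:]]

def forward(x):
    h = relu(x @ weights[0] + biases[0])     # first hidden layer
    h = relu(h @ weights[1] + biases[1])     # second hidden layer
    return softmax(h @ weights[2] + biases[2])  # class probabilities

# Each Dense layer has (inputs * outputs) weights plus one bias per output
n_params = sum(W.size + b.size for W, b in zip(weights, biases))
print(n_params)  # 42

probs = forward(rng.normal(size=(5, 2)))
print(probs.shape)  # (5, 2)
```

The count of 42 parameters (12 + 20 + 10) should match the total that `model.summary()` reports for this architecture.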

Choose the optimizer, i.e. the update rule that the neural network will use during training. Here it is stochastic gradient descent (SGD) with a momentum of 0.99:

optimizer = keras.optimizers.SGD(momentum=0.99)
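With momentum, SGD keeps a running velocity that accumulates past gradients. The NumPy sketch below applies the momentum update documented for Keras's `SGD` (`v = momentum * v - lr * grad`, then `w = w + v`) to the one-dimensional toy loss f(w) = w², using a learning rate of 0.01 (the Keras default). The toy loss and the function name `sgd_trajectory` are assumptions for illustration. With `momentum=0.99` the iterate overshoots the minimum and oscillates, which may partly explain the loss spikes in the training log, whereas plain SGD decays smoothly.

```python
import numpy as np

def sgd_trajectory(w0, lr, momentum, steps):
    """Run SGD with (non-Nesterov) momentum on the toy loss f(w) = w**2."""
    w, v = w0, 0.0
    trajectory = [w]
    for _ in range(steps):
        grad = 2.0 * w                 # derivative of w**2
        v = momentum * v - lr * grad   # velocity accumulates past gradients
        w = w + v
        trajectory.append(w)
    return np.array(trajectory)

plain = sgd_trajectory(w0=1.0, lr=0.01, momentum=0.0, steps=200)
heavy = sgd_trajectory(w0=1.0, lr=0.01, momentum=0.99, steps=200)

# Plain SGD shrinks w by a factor of (1 - 2 * lr) = 0.98 per step and never crosses 0
print(plain[-1])
# Momentum 0.99 overshoots: w changes sign repeatedly on its way to 0
print(np.sum(np.diff(np.sign(heavy)) != 0))
```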

Compile the model, i.e. configure it with a loss function (here binary cross-entropy) and the optimizer, and let the backend build the low-level computation that the CPU or GPU will run during training and testing:

model.compile(loss="binary_crossentropy", optimizer=optimizer)
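The `binary_crossentropy` loss compares each predicted probability with its 0/1 target. Below is a minimal NumPy sketch of the formula, averaged over all entries, with predictions clipped away from 0 and 1 to avoid log(0). The clipping constant `1e-7` mirrors Keras's default epsilon, but treat this as an illustration of the formula rather than Keras's exact implementation.

```python
import numpy as np

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy over all entries (a sketch of the formula)."""
    p = np.clip(y_pred, eps, 1.0 - eps)   # avoid log(0)
    losses = -(y_true * np.log(p) + (1.0 - y_true) * np.log(1.0 - p))
    return losses.mean()

y_true = np.array([[1.0, 0.0], [0.0, 1.0]])
confident = np.array([[0.99, 0.01], [0.02, 0.98]])
unsure = np.array([[0.5, 0.5], [0.5, 0.5]])

print(binary_crossentropy(y_true, confident))  # small loss
print(binary_crossentropy(y_true, unsure))     # log(2), about 0.6931
```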

Example: XOR

The XOR operation is defined as XOR(x, y) = 1 if x != y, else 0.

Synthesize training data for the XOR problem by drawing 10,000 points from a two-dimensional standard normal distribution:

X_train = numpy.random.randn(10000, 2)

print(X_train.shape)

(10000, 2)

print(X_train[:5])

[[ 0.42128999 0.41729839]

[ 0.41792333 1.47336136]

[ 0.42125759 -2.28450677]

[ 1.08729453 0.51543248]

[-1.11614025 0.97745119]]

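Create one-hot target labels for the training data. Treating the sign of each coordinate as a bit, the first column is 1 when both coordinates have the same sign (XOR = 0) and the second column is 1 when the signs differ (XOR = 1):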

y_train = numpy.array(
    [[float(x[0] * x[1] > 0), float(x[0] * x[1] <= 0)] for x in X_train]
)

print(y_train.shape)

(10000, 2)

y_train[:5]

array([[1., 0.],

[1., 0.],

[0., 1.],

[1., 0.],

[0., 1.]])
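The product-of-signs rule above is the XOR of the coordinates' sign bits. The sketch below, using a hypothetical helper `xor_labels`, builds the same one-hot labels with NumPy's elementwise `^` and checks them against the list comprehension. It assumes no coordinate is exactly 0: the two rules differ there, but that case has probability zero for `randn` samples.

```python
import numpy as np

def xor_labels(X):
    """One-hot XOR labels: column 0 = same sign (XOR 0), column 1 = different sign (XOR 1)."""
    bits = X < 0                         # treat each coordinate's sign as a bit
    xor = bits[:, 0] ^ bits[:, 1]        # True where exactly one coordinate is negative
    return np.stack([~xor, xor], axis=1).astype(float)

# Quadrant corners: XOR is 1 exactly when the two signs differ
corners = np.array([[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]])
print(xor_labels(corners)[:, 1])   # [0. 1. 1. 0.]

# Agrees with the list comprehension used for y_train
X = np.random.randn(1000, 2)
y_reference = np.array([[float(x[0] * x[1] > 0), float(x[0] * x[1] <= 0)] for x in X])
print(np.array_equal(xor_labels(X), y_reference))  # True
```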

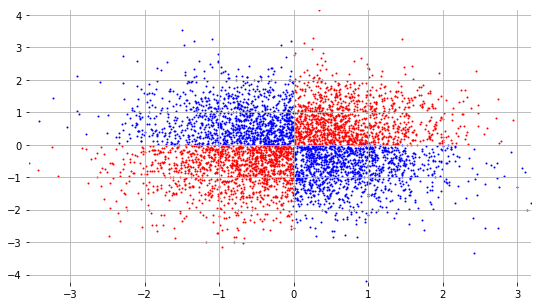

Plot the training data, with same-sign points (first class) in red and opposite-sign points (second class) in blue:

plt.figure(figsize=(9, 5))

plt.scatter(
    X_train[y_train[:, 0] > 0.5, 0], X_train[y_train[:, 0] > 0.5, 1], c="r", s=1
)

plt.scatter(
    X_train[y_train[:, 1] > 0.5, 0], X_train[y_train[:, 1] > 0.5, 1], c="b", s=1
)

<matplotlib.collections.PathCollection at 0x11b9c6588>

Finally, train the model!

results = model.fit(X_train, y_train, epochs=200, batch_size=100)

Epoch 1/200

10000/10000 [==============================] - 0s 27us/step - loss: 0.5036

Epoch 2/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.1147

Epoch 3/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0536

Epoch 4/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0644

Epoch 5/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.3345

Epoch 6/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.3267

Epoch 7/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.2020

Epoch 8/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.1085

Epoch 9/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0752

Epoch 10/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0495

Epoch 11/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0354

Epoch 12/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0244

Epoch 13/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0178

Epoch 14/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0162

Epoch 15/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0215

Epoch 16/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0242

Epoch 17/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0158

Epoch 18/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0152

Epoch 19/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0199

Epoch 20/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0293

Epoch 21/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0305

Epoch 22/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0210

Epoch 23/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0180

Epoch 24/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0144

Epoch 25/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0142

Epoch 26/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0134

Epoch 27/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0138

Epoch 28/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0115

Epoch 29/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0121

Epoch 30/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0121

Epoch 31/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0206

Epoch 32/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0213

Epoch 33/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0285

Epoch 34/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0229

Epoch 35/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0154

Epoch 36/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0148

Epoch 37/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0133

Epoch 38/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0142

Epoch 39/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0154

Epoch 40/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0227

Epoch 41/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0186

Epoch 42/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0282

Epoch 43/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0225

Epoch 44/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0137

Epoch 45/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0121

Epoch 46/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0103

Epoch 47/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0155

Epoch 48/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0195

Epoch 49/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0175

Epoch 50/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0145

Epoch 51/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0128

Epoch 52/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0106

Epoch 53/200

10000/10000 [==============================] - 0s 11us/step - loss: 0.0099

Epoch 54/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0096

Epoch 55/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0093

Epoch 56/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0089

Epoch 57/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0088

Epoch 58/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0086

Epoch 59/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0119

Epoch 60/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0109

Epoch 61/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0111

Epoch 62/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0185

Epoch 63/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0139

Epoch 64/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0132

Epoch 65/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0105

Epoch 66/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0105

Epoch 67/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0119

Epoch 68/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0106

Epoch 69/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0102

Epoch 70/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0088

Epoch 71/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0084

Epoch 72/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0103

Epoch 73/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0114

Epoch 74/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0110

Epoch 75/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0095

Epoch 76/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0116

Epoch 77/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0087

Epoch 78/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0087

Epoch 79/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0080

Epoch 80/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0105

Epoch 81/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0092

Epoch 82/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0088

Epoch 83/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0086

Epoch 84/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0087

Epoch 85/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0087

Epoch 86/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0090

Epoch 87/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0069

Epoch 88/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0076

Epoch 89/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0082

Epoch 90/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0081

Epoch 91/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0081

Epoch 92/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0076

Epoch 93/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0082

Epoch 94/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0072

Epoch 95/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0072

Epoch 96/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0090

Epoch 97/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0073

Epoch 98/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0071

Epoch 99/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0068

Epoch 100/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0075

Epoch 101/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0070

Epoch 102/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0079

Epoch 103/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0078

Epoch 104/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0074

Epoch 105/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0067

Epoch 106/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0066

Epoch 107/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0072

Epoch 108/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0085

Epoch 109/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0070

Epoch 110/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0072

Epoch 111/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0083

Epoch 112/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0085

Epoch 113/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0073

Epoch 114/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0087

Epoch 115/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0097

Epoch 116/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0068

Epoch 117/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0067

Epoch 118/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0078

Epoch 119/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0086

Epoch 120/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0070

Epoch 121/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0063

Epoch 122/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0067

Epoch 123/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0073

Epoch 124/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0084

Epoch 125/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0087

Epoch 126/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0087

Epoch 127/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0092

Epoch 128/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0070

Epoch 129/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0064

Epoch 130/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0058

Epoch 131/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0062

Epoch 132/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0097

Epoch 133/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0105

Epoch 134/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0081

Epoch 135/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0087

Epoch 136/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0068

Epoch 137/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0063

Epoch 138/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0062

Epoch 139/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0060

Epoch 140/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0063

Epoch 141/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0071

Epoch 142/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0068

Epoch 143/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0062

Epoch 144/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0058

Epoch 145/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0059

Epoch 146/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0058

Epoch 147/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0060

Epoch 148/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0061

Epoch 149/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0062

Epoch 150/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0058

Epoch 151/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0056

Epoch 152/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0057

Epoch 153/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0057

Epoch 154/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0056

Epoch 155/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0071

Epoch 156/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0062

Epoch 157/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0073

Epoch 158/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0064

Epoch 159/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0069

Epoch 160/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0069

Epoch 161/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0058

Epoch 162/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0057

Epoch 163/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0057

Epoch 164/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0061

Epoch 165/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0068

Epoch 166/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0062

Epoch 167/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0065

Epoch 168/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0064

Epoch 169/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0072

Epoch 170/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0056

Epoch 171/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0055

Epoch 172/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0065

Epoch 173/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0062

Epoch 174/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0053

Epoch 175/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0055

Epoch 176/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0056

Epoch 177/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0080

Epoch 178/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0080

Epoch 179/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0059

Epoch 180/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0058

Epoch 181/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0064

Epoch 182/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0066

Epoch 183/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0057

Epoch 184/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0056

Epoch 185/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0056

Epoch 186/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0060

Epoch 187/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0059

Epoch 188/200

10000/10000 [==============================] - 0s 10us/step - loss: 0.0054

Epoch 189/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0057

Epoch 190/200

10000/10000 [==============================] - 0s 8us/step - loss: 0.0072

Epoch 191/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0060

Epoch 192/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0057

Epoch 193/200

10000/10000 [==============================] - 0s 8us/step - loss: 0.0057

Epoch 194/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0056

Epoch 195/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0052

Epoch 196/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0054

Epoch 197/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0058

Epoch 198/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0059

Epoch 199/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0061

Epoch 200/200

10000/10000 [==============================] - 0s 9us/step - loss: 0.0074
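Each epoch in the log above is one pass over all 10,000 training points in mini-batches of `batch_size=100`, i.e. 100 gradient updates per epoch and 20,000 over 200 epochs. The generator below is a hypothetical sketch of that batching (Keras's `fit` also shuffles the training data each epoch by default); it illustrates the idea and does not reproduce Keras internals.

```python
import numpy as np

def minibatches(X, y, batch_size, rng):
    """Yield shuffled (X_batch, y_batch) pairs covering the data once (one epoch)."""
    order = rng.permutation(len(X))           # new random order each epoch
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        yield X[idx], y[idx]

rng = np.random.default_rng(0)
X = rng.normal(size=(10000, 2))
y = np.zeros((10000, 2))

batches = list(minibatches(X, y, batch_size=100, rng=rng))
print(len(batches))            # 100 updates per epoch
print(batches[0][0].shape)     # (100, 2)
print(len(batches) * 200)      # 20000 updates over 200 epochs
```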

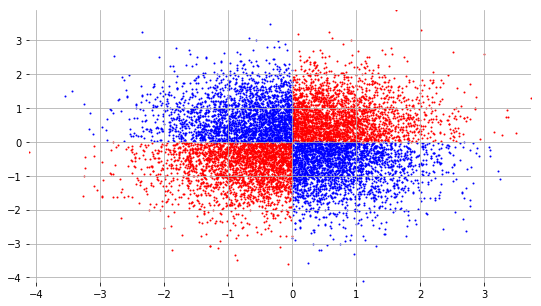

Plot the training loss as a function of the epoch number:

plt.plot(results.history["loss"])

[<matplotlib.lines.Line2D at 0x11c00a518>]

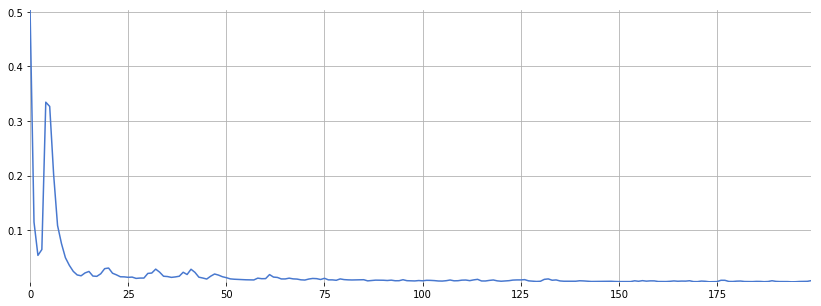

Create test data:

X_test = numpy.random.randn(5000, 2)

Use the trained neural network to predict class probabilities for the test data (each row of the output holds the two softmax probabilities):

y_test = model.predict(X_test)

y_test.shape

(5000, 2)

Let’s see if it worked by plotting each test point in the color of its predicted class:

plt.figure(figsize=(9, 5))

plt.scatter(X_test[y_test[:, 0] > 0.5, 0], X_test[y_test[:, 0] > 0.5, 1], c="r", s=1)

plt.scatter(X_test[y_test[:, 1] > 0.5, 0], X_test[y_test[:, 1] > 0.5, 1], c="b", s=1)

<matplotlib.collections.PathCollection at 0x11be77d68>
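Besides inspecting the scatter plot, one can score the predictions numerically. The hypothetical helper `xor_accuracy` below recomputes the true class of each point with the same sign-product rule used for training, takes the class with the highest predicted probability via `argmax`, and returns the fraction that match. The input here is made up; in the notebook one would call `xor_accuracy(X_test, y_test)`.

```python
import numpy as np

def xor_accuracy(X, probs):
    """Fraction of points whose most probable class matches the true XOR class."""
    # True class: 0 if the coordinates have the same sign, 1 otherwise
    true_class = (X[:, 0] * X[:, 1] <= 0).astype(int)
    # Predicted class: column with the larger softmax probability
    predicted_class = probs.argmax(axis=1)
    return float((predicted_class == true_class).mean())

# Made-up example: three correct predictions and one wrong one
X = np.array([[1.0, 2.0], [-1.0, 2.0], [-1.0, -2.0], [1.0, -2.0]])
probs = np.array([[0.9, 0.1], [0.2, 0.8], [0.7, 0.3], [0.6, 0.4]])
print(xor_accuracy(X, probs))  # 0.75
```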