Tempo Estimation#

import IPython.display as ipd

import matplotlib.pyplot as plt

import librosa.display

import numpy

from mirdotcom import mirdotcom

mirdotcom.init()

Tempo (Wikipedia) refers to the speed of a musical piece. More precisely, tempo is the rate of the musical beat, i.e. the reciprocal of the beat period, and is usually expressed in beats per minute (BPM).
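
The reciprocal relationship between tempo and beat period can be sketched in a few lines. The helper names below are hypothetical and not part of librosa:

```python
def bpm_to_beat_period(bpm):
    """Return the beat period in seconds for a tempo given in BPM."""
    return 60.0 / bpm


def beat_period_to_bpm(period_sec):
    """Return the tempo in BPM for a beat period given in seconds."""
    return 60.0 / period_sec


print(bpm_to_beat_period(120.0))  # 0.5
print(beat_period_to_bpm(0.5))    # 120.0
```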

In classical music, common tempo markings include grave, largo, lento, adagio, andante, moderato, allegro, vivace, and presto. See Basic tempo markings for more.

Tempogram#

Tempo can vary locally within a piece. Therefore, we introduce the tempogram (FMP, p. 317) as a feature matrix which indicates the prevalence of certain tempi at each moment in time.

Fourier Tempogram#

The Fourier tempogram (FMP, p. 319) is the magnitude of the short-time Fourier transform of the novelty function, i.e. the magnitude spectrogram of the novelty function.

Load an audio file:

filename = mirdotcom.get_audio("58bpm.wav")

x, sr = librosa.load(filename)

ipd.Audio(x, rate=sr)

The tempo of this excerpt is about 58 BPM, or 116 BPM if the beat is counted at twice that rate.

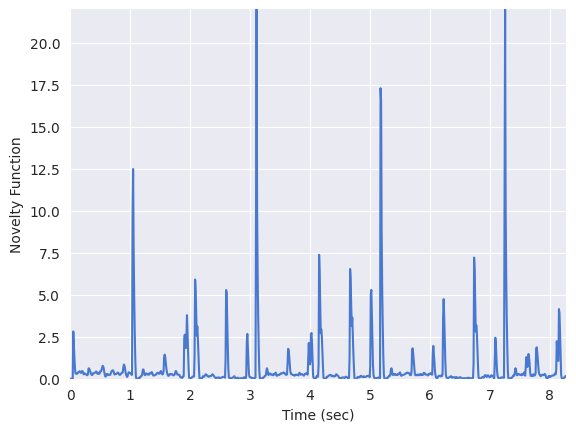

Compute the onset envelope, i.e. novelty function:

hop_length = 200 # samples per frame

onset_env = librosa.onset.onset_strength(y=x, sr=sr, hop_length=hop_length, n_fft=2048)

Plot the onset envelope:

frames = range(len(onset_env))

t = librosa.frames_to_time(frames, sr=sr, hop_length=hop_length)

plt.plot(t, onset_env)

plt.xlim(0, t.max())

plt.ylim(0)

plt.xlabel("Time (sec)")

plt.ylabel("Novelty Function")

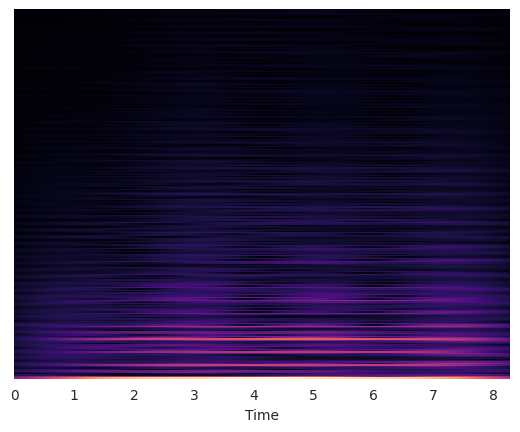

Compute the short-time Fourier transform (STFT) of the novelty function. Because the novelty function is already sampled only once per frame (every hop_length audio samples), the hop length of this STFT should be small, here a single novelty frame, to preserve time resolution:

S = librosa.stft(onset_env, hop_length=1, n_fft=512)

fourier_tempogram = numpy.absolute(S)
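
Each row of this STFT corresponds to a frequency of the novelty function, which can be converted to a tempo in BPM. The following is a minimal sketch, assuming the default sampling rate of librosa.load (22050 Hz) together with the hop_length and n_fft values used above; the helper name fourier_tempogram_bpm is hypothetical:

```python
def fourier_tempogram_bpm(n_fft, sr, hop_length):
    """Return the tempo in BPM represented by each row of the Fourier tempogram."""
    # The novelty function has one value per frame, so its sampling rate is:
    frame_rate = sr / hop_length  # novelty frames per second
    # Row k of an n_fft-point STFT is k * frame_rate / n_fft Hz; times 60 gives BPM.
    return [60.0 * k * frame_rate / n_fft for k in range(n_fft // 2 + 1)]


bpm_axis = fourier_tempogram_bpm(n_fft=512, sr=22050, hop_length=200)
print(len(bpm_axis))  # 257
print(bpm_axis[1])    # 12.919921875
print(bpm_axis[-1])   # 3307.5
```

Under these assumptions the rows are spaced about 12.9 BPM apart, and most of them lie far above musical tempi, so only the lowest rows are usually of interest.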

Plot the Fourier tempogram:

librosa.display.specshow(fourier_tempogram, sr=sr, hop_length=hop_length, x_axis="time")

Autocorrelation Tempogram#

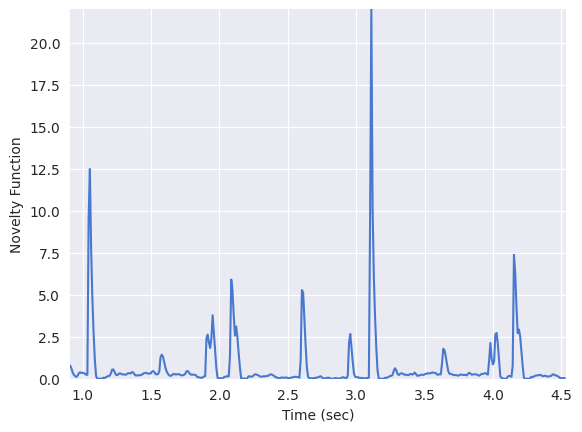

Consider a segment from the above novelty function:

n0 = 100

n1 = 500

plt.plot(t[n0:n1], onset_env[n0:n1])

plt.xlim(t[n0], t[n1])

plt.xlabel("Time (sec)")

plt.ylabel("Novelty Function")

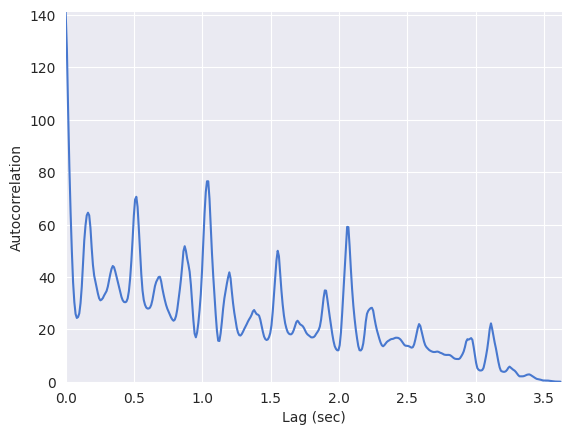

Plot the autocorrelation of this segment:

tmp = numpy.log1p(onset_env[n0:n1])

r = librosa.autocorrelate(tmp)

plt.plot(t[: n1 - n0], r)

plt.xlim(t[0], t[n1 - n0])

plt.xlabel("Lag (sec)")

plt.ylabel("Autocorrelation")

plt.ylim(0)

Lags at which the autocorrelation is high are good candidates for the beat period.
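
As a rough illustration of this idea, the sketch below picks the strongest autocorrelation peak within a plausible tempo range and converts its lag to BPM. The function name and the 40 to 240 BPM search range are assumptions made for this example, and the input is a synthetic novelty function rather than the audio above:

```python
import numpy as np


def tempo_from_autocorrelation(novelty, frame_rate, min_bpm=40.0, max_bpm=240.0):
    """Estimate tempo (BPM) from the strongest autocorrelation peak.

    frame_rate is the number of novelty frames per second (sr / hop_length).
    """
    novelty = np.asarray(novelty, dtype=float)
    # Autocorrelation for non-negative lags only.
    r = np.correlate(novelty, novelty, mode="full")[len(novelty) - 1 :]
    # Restrict the search to lags that map into the assumed tempo range.
    min_lag = int(np.ceil(60.0 * frame_rate / max_bpm))
    max_lag = min(int(np.floor(60.0 * frame_rate / min_bpm)), len(r) - 1)
    best_lag = min_lag + int(np.argmax(r[min_lag : max_lag + 1]))
    # A lag of best_lag frames is a beat period of best_lag / frame_rate seconds.
    return 60.0 * frame_rate / best_lag


# Synthetic novelty function: one impulse every 50 frames at 100 frames per second,
# i.e. a beat period of 0.5 s.
novelty = np.zeros(400)
novelty[::50] = 1.0
print(tempo_from_autocorrelation(novelty, frame_rate=100.0))  # 120.0
```

Integer multiples of the beat period also produce peaks, which is one reason tempo estimates are often off by a factor of two.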

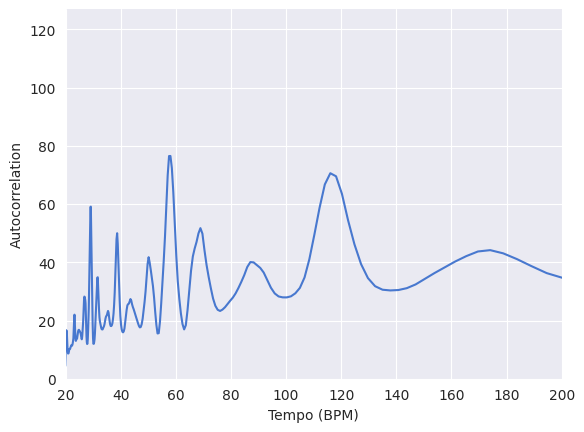

Plot the same autocorrelation against tempo in BPM instead of lag. Lag 0 is skipped because it would correspond to an infinite tempo:

plt.plot(60 / t[1 : n1 - n0], r[1:])

plt.xlim(20, 200)

plt.xlabel("Tempo (BPM)")

plt.ylabel("Autocorrelation")

plt.ylim(0)

We will apply this principle of autocorrelation to estimate the tempo at every segment in the novelty function.

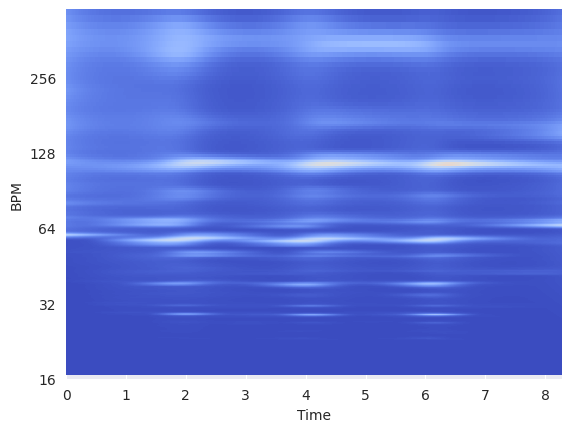

librosa.feature.tempogram implements an autocorrelation tempogram, a short-time autocorrelation of the (spectral) novelty function.
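
To make the idea of a short-time autocorrelation concrete, here is a minimal sketch under stated assumptions: a Hann window, zero padding at the edges, and no normalization. It is not librosa's implementation, which may differ in windowing, padding, and normalization, and the function name autocorr_tempogram is hypothetical:

```python
import numpy as np


def autocorr_tempogram(novelty, win_length):
    """Return a (win_length, n_frames) array of local autocorrelations.

    Row l, column n holds the autocorrelation at lag l of the windowed
    novelty segment centered on frame n.
    """
    novelty = np.asarray(novelty, dtype=float)
    n_frames = len(novelty)
    # Zero-pad so that every frame has a full window centered on it.
    padded = np.pad(novelty, win_length // 2, mode="constant")
    window = np.hanning(win_length)
    out = np.empty((win_length, n_frames))
    for n in range(n_frames):
        segment = padded[n : n + win_length] * window
        full = np.correlate(segment, segment, mode="full")
        out[:, n] = full[win_length - 1 :]  # keep non-negative lags only
    return out


# Synthetic novelty function with one impulse every 50 frames.
novelty = np.zeros(400)
novelty[::50] = 1.0
tg = autocorr_tempogram(novelty, win_length=200)
print(tg.shape)  # (200, 400)
```

Each column can then be read like the single-segment autocorrelation above: rows with high values indicate likely beat periods at that moment in time.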

Compute a tempogram:

tempogram = librosa.feature.tempogram(

onset_envelope=onset_env, sr=sr, hop_length=hop_length, win_length=400

)

librosa.display.specshow(

tempogram, sr=sr, hop_length=hop_length, x_axis="time", y_axis="tempo"

)

Estimating Global Tempo#

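We will use librosa.beat.beat_track to estimate the global tempo of an audio file. It returns the tempo estimate first, followed by the detected beat frames.

Estimate the tempo:

tempo, _ = librosa.beat.beat_track(y=x, sr=sr)

tempo = numpy.atleast_1d(tempo) # tempo may be a scalar or an array, depending on the librosa version

print(tempo)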

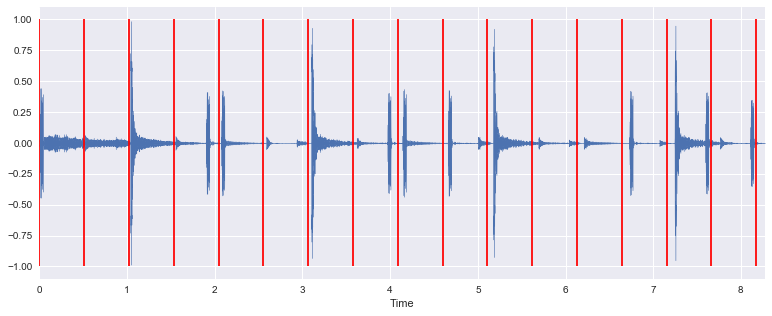

Visualize the tempo estimate on top of the input signal:

T = len(x) / float(sr)

seconds_per_beat = 60.0 / tempo[0]

beat_times = numpy.arange(0, T, seconds_per_beat)

librosa.display.waveshow(x, sr=sr)

plt.vlines(beat_times, -1, 1, color="r")

plt.ylabel("Amplitude")

Listen to the input signal with a click track using the tempo estimate:

clicks = librosa.clicks(times=beat_times, sr=sr, length=len(x))

ipd.Audio(x + clicks, rate=sr)