Basic Feature Extraction#

Somehow, we must extract the characteristics of our audio signal that are most relevant to the problem we are trying to solve. For example, if we want to classify instruments by timbre, we will want features that distinguish sounds by their timbre and not their pitch. If we want to perform pitch detection, we want features that distinguish pitch and not timbre.

This process is known as feature extraction.

from pathlib import Path

import matplotlib.pyplot as plt

import librosa

import librosa.display

import numpy

import sklearn

from mirdotcom import mirdotcom

mirdotcom.init()

Let’s begin with twenty audio files: ten kick drum samples, and ten snare drum samples. Each audio file contains one drum hit.

Read and store each signal:

kick_signals = [

librosa.load(p)[0]

for p in Path().glob(mirdotcom.AUDIO_DIRECTORY + "/drum_samples/train/kick_*.mp3")

]

snare_signals = [

librosa.load(p)[0]

for p in Path().glob(mirdotcom.AUDIO_DIRECTORY + "/drum_samples/train/snare_*.mp3")

]

len(kick_signals)

10

len(snare_signals)

10

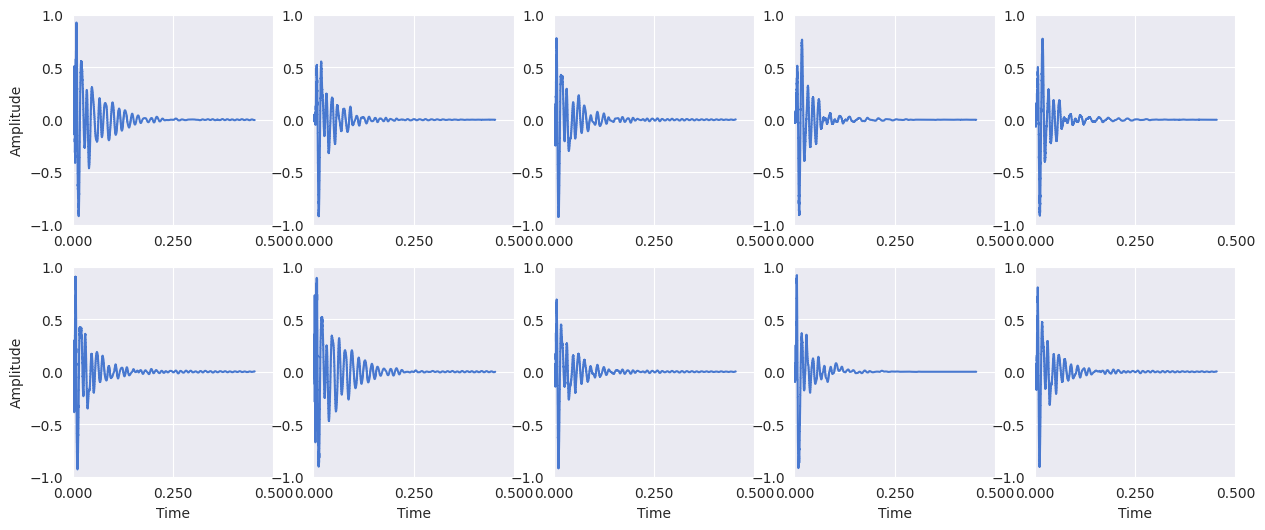

Display the kick drum signals:

plt.figure(figsize=(15, 6))

for i, x in enumerate(kick_signals):

plt.subplot(2, 5, i + 1)

librosa.display.waveshow(x[:10000])

# Y-axis label only on the first column

if i == 0 or i == 5:

plt.ylabel("Amplitude")

# X-axis label only on the bottom row

if i >= 5:

plt.xlabel("Time")

else:

plt.xlabel("")

plt.gca().set_xticks([0, 0.25, 0.5])

plt.ylim(-1, 1)

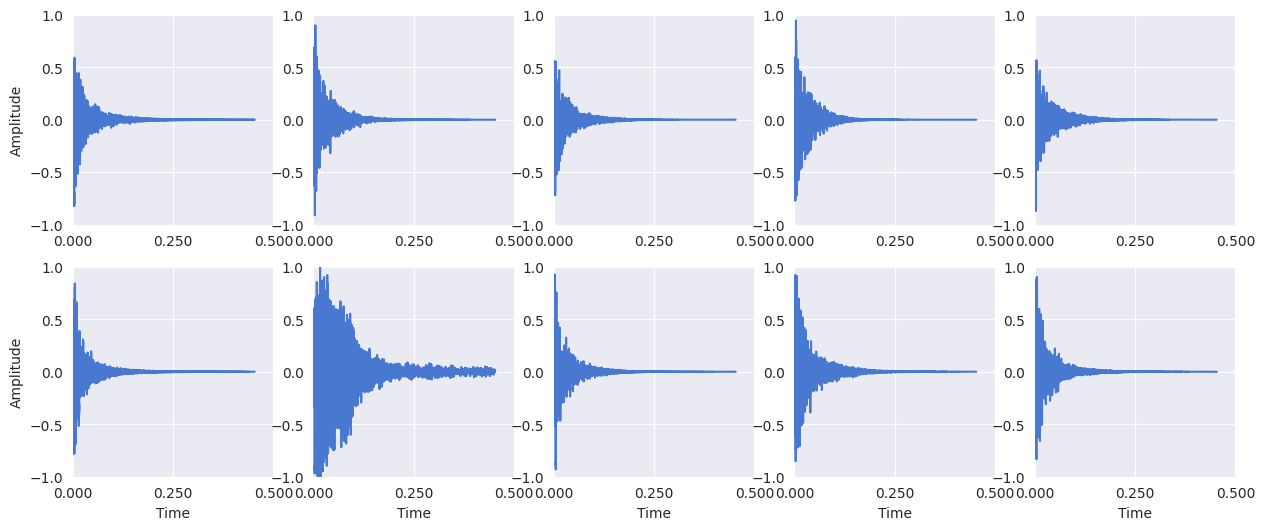

Display the snare drum signals:

plt.figure(figsize=(15, 6))

for i, x in enumerate(snare_signals):

plt.subplot(2, 5, i + 1)

librosa.display.waveshow(x[:10000])

# Y-axis label only on the first column

if i == 0 or i == 5:

plt.ylabel("Amplitude")

# X-axis label only on the bottom row

if i >= 5:

plt.xlabel("Time")

else:

plt.xlabel("")

plt.gca().set_xticks([0, 0.25, 0.5])

plt.ylim(-1, 1)

Constructing a Feature Vector#

A feature vector is simply a collection of features. Here is a simple function that constructs a two-dimensional feature vector from a signal:

def extract_features(signal):

return [

librosa.feature.zero_crossing_rate(y=signal)[0, 0],

librosa.feature.spectral_centroid(y=signal)[0, 0],

]

If we want to aggregate all of the feature vectors among signals in a collection, we can use a list comprehension as follows:

kick_features = numpy.array([extract_features(x) for x in kick_signals])

snare_features = numpy.array([extract_features(x) for x in snare_signals])

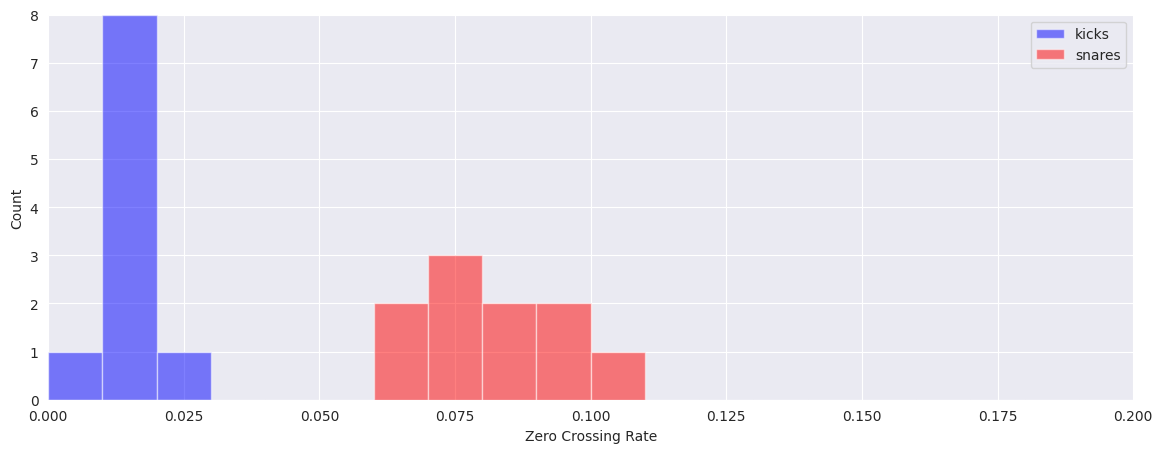

Visualize the differences in features by plotting separate histograms for each of the classes:

plt.figure(figsize=(14, 5))

plt.hist(kick_features[:, 0], color="b", range=(0, 0.2), alpha=0.5, bins=20)

plt.hist(snare_features[:, 0], color="r", range=(0, 0.2), alpha=0.5, bins=20)

plt.legend(("kicks", "snares"))

plt.xlabel("Zero Crossing Rate")

plt.ylabel("Count")

Text(0, 0.5, 'Count')

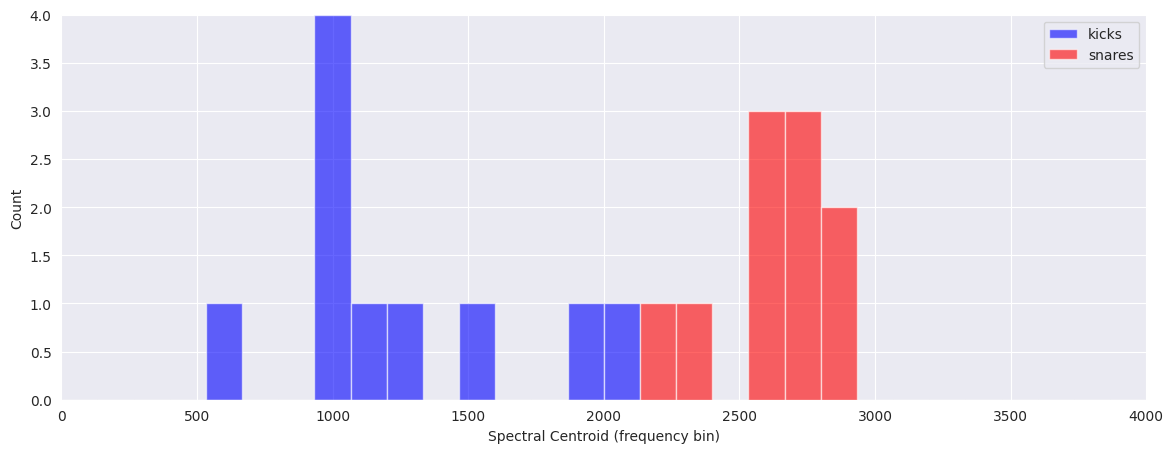

plt.figure(figsize=(14, 5))

plt.hist(kick_features[:, 1], color="b", range=(0, 4000), bins=30, alpha=0.6)

plt.hist(snare_features[:, 1], color="r", range=(0, 4000), bins=30, alpha=0.6)

plt.legend(("kicks", "snares"))

plt.xlabel("Spectral Centroid (frequency bin)")

plt.ylabel("Count")

Text(0, 0.5, 'Count')

Feature Scaling#

The features that we used in the previous example included zero crossing rate and spectral centroid. These two features are expressed using different units. This discrepancy can pose problems when performing classification later. Therefore, we will normalize each feature vector to a common range and store the normalization parameters for later use.

Many techniques exist for scaling your features. For now, we’ll use sklearn.preprocessing.MinMaxScaler. MinMaxScaler returns an array of scaled values such that each feature dimension is in the range -1 to 1.

Let’s concatenate all of our feature vectors into one feature table:

feature_table = numpy.vstack((kick_features, snare_features))

print(feature_table.shape)

(20, 2)

Scale each feature dimension to be in the range -1 to 1:

scaler = sklearn.preprocessing.MinMaxScaler(feature_range=(-1, 1))

training_features = scaler.fit_transform(feature_table)

print(training_features.min(axis=0))

print(training_features.max(axis=0))

[-1. -1.]

[1. 1.]

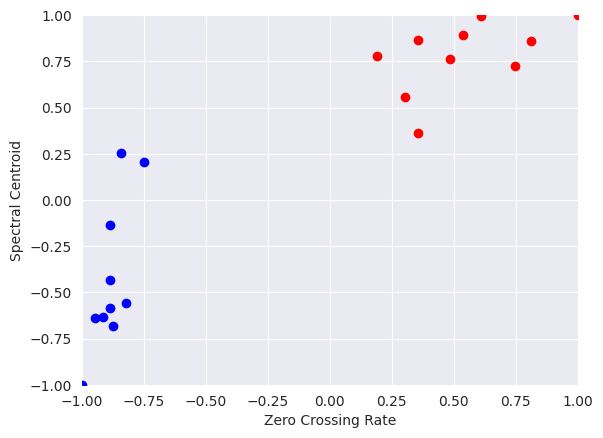

Plot the scaled features:

plt.scatter(training_features[:10, 0], training_features[:10, 1], c="b")

plt.scatter(training_features[10:, 0], training_features[10:, 1], c="r")

plt.xlabel("Zero Crossing Rate")

plt.ylabel("Spectral Centroid")

Text(0, 0.5, 'Spectral Centroid')